We are a group of Columbia University researchers and affiliates working on neural networks and their applications. We meet roughly weekly for talks and paper discussions on the following topics:

- Deep/hierarchical/recurrent architectures

- Training/regularization techniques

- Representation learning

- Software tools

All are welcome to participate. Meetings are typically held on Wednesdays, 12:00pm to 1:00pm, in CSB 453 (the conference room beside the CS lounge in the Mudd building).

For updates and discussion, please join our Google group and our Facebook group.

Upcoming

Past

| 10/26/16 - | Talk: "Intelligent Thumbnail Selection" by Kamil Sindi (JW Player) [abstract]

Kamil Sindi is the lead data scientist at JW Player, focused on machine learning models in production systems. He will present a TensorFlow-based model that extracts the "best" frames from a video, which are then used as auto-generated thumbnails and thumbstrips. The model was built with transfer learning: Google's Inception-v3 model, pretrained on ImageNet data, was retrained on JW Player's thumbnail library.

|

| 10/19/16 - | Paper discussion: Unsupervised representation learning with deep convolutional generative adversarial networks [paper] |

| 10/12/16 - | Paper discussion: Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering [paper] |

| 10/05/16 - | Paper discussion: Toward an Integration of Deep Learning and Neuroscience [paper] |

| 09/28/16 - | Talk: "Personalized engagement prediction at scale" by Nikolai Yakovenko (Twitter) [abstract]

Nikolai Yakovenko is a Columbia graduate and currently an engineer on Cortex, Twitter's applied AI team focused on deep learning in production systems. He will present a Torch-based system for predicting engagement with social media content, given a {tweet, author, consumer} tuple. The overall system is simple but flexible. Its components include word (and phrase) vectors fed into LSTMs, character-based text models, follow-graph embeddings similar to DeepWalk or Node2Vec, and other independently pretrained components.

|
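The follow-graph embeddings in the Twitter talk are compared to DeepWalk, whose first step turns a graph into "sentences" of nodes using truncated random walks; those walks are then fed to a skip-gram (word2vec-style) model. The following is a minimal sketch of the walk-generation step only, assuming a small follow graph stored as a dict of adjacency lists. The function name and the graph are hypothetical, and this is not the system presented in the talk.

```python
import random


def truncated_random_walks(adjacency, walks_per_node, walk_length, seed=0):
    """DeepWalk-style corpus: each walk starts at a node and follows uniformly
    chosen out-edges until it has walk_length nodes or hits a node with no out-edges."""
    rng = random.Random(seed)
    nodes = list(adjacency)
    walks = []
    for _ in range(walks_per_node):
        rng.shuffle(nodes)  # visit start nodes in a fresh random order each pass
        for start in nodes:
            walk = [start]
            while len(walk) < walk_length and adjacency[walk[-1]]:
                walk.append(rng.choice(adjacency[walk[-1]]))
            walks.append(walk)
    return walks


# Hypothetical follow graph: user -> accounts that user follows.
follows = {
    "alice": ["bob", "carol"],
    "bob": ["carol"],
    "carol": ["alice"],
    "dave": [],
}

for walk in truncated_random_walks(follows, walks_per_node=1, walk_length=5):
    print(walk)
```

Each walk would play the role of a sentence when training node embeddings with skip-gram; Node2Vec differs mainly in sampling the next step from a biased rather than uniform distribution.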

| 09/21/16 - | Paper discussion: Why does deep and cheap learning work so well? [paper] [slides] |

| 12/16/15 - | Talk: "Deep generative image models using a Laplacian pyramid of adversarial networks" by Emily Denton (NYU) [slides] [notes] [abstract]

We introduce a generative parametric model capable of producing high-quality samples of natural images. Our approach uses a cascade of convolutional networks within a Laplacian pyramid framework to generate images in a coarse-to-fine fashion. At each level of the pyramid, a separate generative convnet model is trained using the Generative Adversarial Nets approach. Samples drawn from our model are of significantly higher quality than those of alternative approaches.

|
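The coarse-to-fine idea behind the Laplacian pyramid in the LAPGAN talk can be illustrated without any GAN machinery. This sketch decomposes a 1-D signal into band-pass residuals plus a coarse base and rebuilds it level by level. In the model described in the abstract, sampling instead starts from a generated coarse image, and a separate conditional generator produces each residual. The pair-averaging downsampler and sample-repeating upsampler are simplifying assumptions for illustration, not the operators used in the paper.

```python
def downsample(x):
    """Halve the resolution by averaging adjacent pairs (length must be even)."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def upsample(x):
    """Double the resolution by repeating each sample."""
    return [v for v in x for _ in range(2)]


def build_pyramid(x, levels):
    """Return [h_0, ..., h_{levels-1}, coarsest]: each h_k holds the detail
    lost when going from level k to level k + 1."""
    pyramid, current = [], list(x)
    for _ in range(levels):
        coarse = downsample(current)
        pyramid.append([a - b for a, b in zip(current, upsample(coarse))])
        current = coarse
    pyramid.append(current)
    return pyramid


def reconstruct(pyramid):
    """Coarse-to-fine: upsample the current estimate and add the next residual.
    In LAPGAN, each residual would instead come from a conditional generator."""
    current = pyramid[-1]
    for residual in reversed(pyramid[:-1]):
        current = [a + b for a, b in zip(upsample(current), residual)]
    return current


signal = [1, 2, 3, 4, 5, 6, 7, 8]
pyr = build_pyramid(signal, levels=2)
print(pyr[-1])            # coarsest level: [2.5, 6.5]
print(reconstruct(pyr))   # recovers the original signal
```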

| 12/02/15 - | Talk: "Deep Learning for Poker: Inference From Patterns in an Adversarial Environment" by Nikolai Yakovenko (Columbia) [slides] [notes] [abstract]

With the seemingly effortless success of DeepMind (and others) at creating deep learning AIs that learn to master video games, it is not surprising that a similar deep learning system could learn to bet a poker hand at the level of an experienced human player. However, unlike most video games, poker requires the AI to learn a strategy that is not easily exploitable by an adversarial agent, including one that might have access to 100,000 hands of the AI's previous games. We briefly present our current system, which we have shown can learn several heads-up (one-on-one) limit poker games, going from zero knowledge to the level of a competent human player. We then discuss adapting these techniques to the more complicated No-Limit Hold'em game, in which a network must choose bet sizes as well as whether to bet at all, and must handle an opponent that can also bet against it in more or less continuous amounts. We are preparing this system for the 2016 Annual Computer Poker Competition, where state-of-the-art academic systems compete in heads-up No-Limit Hold'em. Previous winners have been based on solving for an approximate Nash equilibrium betting strategy. Last year's winner (from Carnegie Mellon University) played well enough to earn a "statistical draw" against some of the best heads-up No-Limit Hold'em players in the world. Hopefully, our deep learning system can find holes in the equilibrium-seeking system's game.

|

| 11/18/15 - | Talk: "Deep Clustering: Discriminative Embeddings for Segmentation and Separation" by Zhuo Chen (Columbia) [slides] [notes] [abstract]

We address the "cocktail-party" source separation problem in a deep learning framework called deep clustering. Previous deep network approaches to separation have shown promising performance in scenarios with a fixed number of sources, each belonging to a distinct signal class, such as speech and noise. However, for an arbitrary number and class of sources, such "class-based" methods are not suitable. Instead, we train a deep network to assign contrastive embedding vectors to each time-frequency region of the spectrogram in order to implicitly predict the target spectrogram segmentation labels from the input mixtures. This yields a deep network-based analogue to spectral clustering, in that the embeddings form a low-rank approximation to an ideal pairwise affinity matrix, while being much faster. The objective is also equivalent to the k-means objective, where the segmentation defines the cluster assignments of the embeddings. At test time, a clustering step "decodes" the segmentation implicit in the embeddings by optimizing with respect to the unknown assignments. Preliminary experiments on single-channel mixtures of speech from multiple speakers show that a speaker-independent model trained on two-speaker mixtures can improve signal quality for mixtures of held-out speakers by an average of 6 dB. More dramatically, the same model does surprisingly well with three-speaker mixtures.

|
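The "low-rank approximation to an ideal pairwise affinity matrix" from the deep clustering talk can be made concrete. Assume the loss is ||VV^T − YY^T||_F^2, as in the published deep clustering work, where each row of V is a unit-norm embedding of one time-frequency bin and each row of Y is a one-hot source label. Under that assumption, the loss can be expanded so that no N × N matrix is ever formed. The pure-Python sketch below (function names are illustrative) checks that both forms agree.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def unit(v):
    """Scale an embedding to unit length."""
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def affinity_loss_naive(V, Y):
    """||V V^T - Y Y^T||_F^2 computed from the full N x N affinity matrices."""
    return sum((dot(vi, vj) - dot(yi, yj)) ** 2
               for vi, yi in zip(V, Y) for vj, yj in zip(V, Y))


def cross_gram(A, B):
    """A^T B for row-major A (N x P) and B (N x Q); the result is only P x Q."""
    return [[sum(a[p] * b[q] for a, b in zip(A, B)) for q in range(len(B[0]))]
            for p in range(len(A[0]))]


def sq_frobenius(M):
    return sum(x * x for row in M for x in row)


def affinity_loss_low_rank(V, Y):
    """Same value via ||V^T V||^2 - 2 ||V^T Y||^2 + ||Y^T Y||^2 (cost linear in N)."""
    return (sq_frobenius(cross_gram(V, V))
            - 2 * sq_frobenius(cross_gram(V, Y))
            + sq_frobenius(cross_gram(Y, Y)))


# Four time-frequency bins, 3-D embeddings, two sources (one-hot labels).
V = [unit(v) for v in ([1.0, 0.2, 0.0], [0.9, 0.1, 0.3], [0.0, 1.0, 0.4], [0.1, 0.8, 0.9])]
Y = [[1, 0], [1, 0], [0, 1], [0, 1]]
print(affinity_loss_naive(V, Y), affinity_loss_low_rank(V, Y))
```

The expansion works because ||VV^T||_F^2 = ||V^TV||_F^2 and trace(VV^T YY^T) = ||V^TY||_F^2, so only D × D, D × C, and C × C products are needed.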

| 11/02/15 - | Talk: "Natural Language Understanding" by Kyunghyun Cho (NYU) [slides] [notes] [abstract]

Neural machine translation is a recently proposed framework for machine translation that is based purely on neural networks. It radically departs from the existing, widely used, often phrase-based statistical machine translation by viewing machine translation as a supervised, structured output prediction problem and solving it with recurrent neural networks. In this talk, I will describe in detail what neural machine translation is and discuss recent advances that have made it possible for neural machine translation systems to be competitive with the conventional statistical approach. I will conclude the talk with a big question: is natural language special?

|

| 10/21/15 - | Talk: "Musical Data Augmentation" by Brian McFee (NYU) [slides] [notes] [abstract]

Predictive models for music annotation tasks are practically limited by a paucity of well-annotated training data. In the broader context of large-scale machine learning, the concept of "data augmentation" (supplementing a training set with carefully perturbed samples) has emerged as an important component of robust systems. In this work, we develop a general software framework for augmenting annotated musical data sets, which will allow practitioners to easily expand training sets with musically motivated perturbations of both audio and annotations. As a proof of concept, we investigate the effects of data augmentation on the task of recognizing instruments in mixed signals.

|

| 10/14/15 - | Talk: "Exploring How Deep Neural Networks Form Phonemic Categories" by Tasha Nagamine (Columbia) [slides] [notes] [abstract]

Deep neural networks (DNNs) have become the dominant technique for acoustic-phonetic modeling due to their markedly improved performance over other models. Despite this, little is understood about the computation they implement in creating phonemic categories from highly variable acoustic signals. In this paper, we analyzed a DNN trained for phoneme recognition and characterized its representational properties, both at the single-node and population level in each layer. At the single-node level, we found strong selectivity to distinct phonetic features in all layers. Node selectivity to specific manners and places of articulation appeared from the first hidden layer and became more explicit in deeper layers. Furthermore, we found that nodes with similar phonetic feature selectivity were differentially activated by different exemplars of these features. Thus, each node becomes tuned to a particular acoustic manifestation of the same feature, providing an effective representational basis for the formation of invariant phonemic categories. This study reveals that phonetic features organize the activations in different layers of a DNN, a result that mirrors recent findings of feature encoding in the human auditory system. These insights may provide a better understanding of the limitations of current models, leading to new strategies for improving their performance.

|

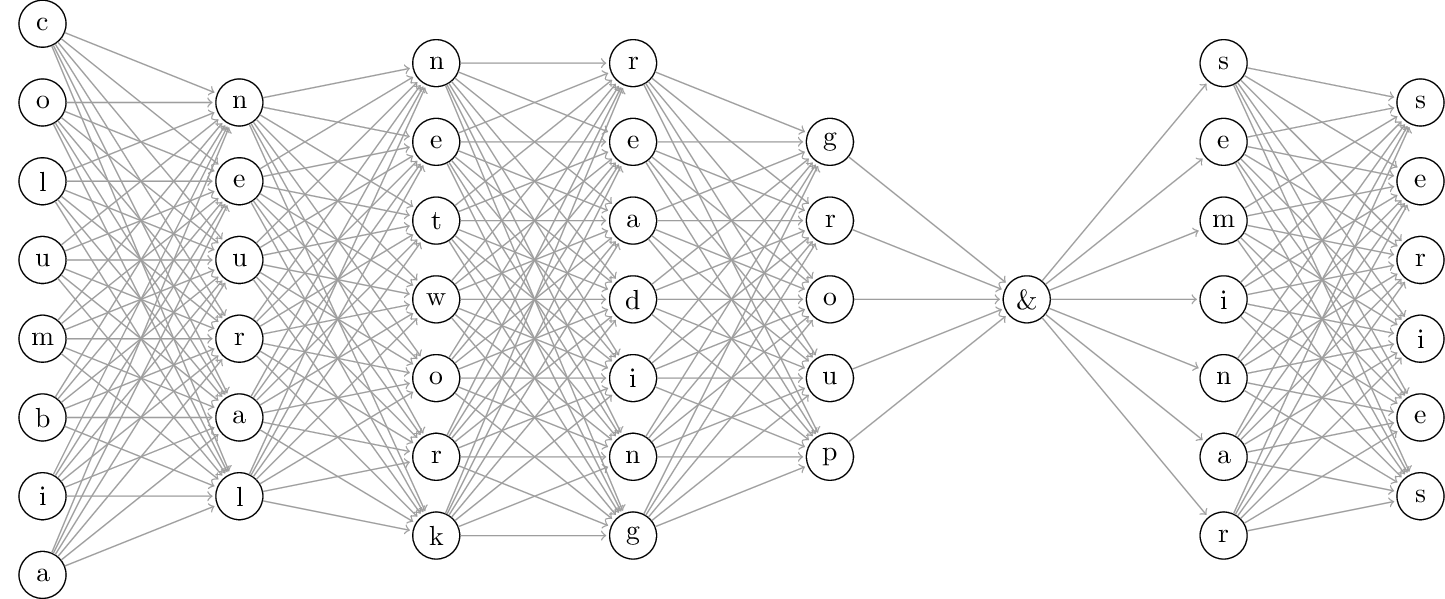

| 09/23/15 - | Talk: "Neural Machine Translation (NMT)" by Bart van Merriënboer (University of Montreal/Facebook AI Research) [notes] [abstract]

The field of machine translation has been dominated for years by n-gram-based statistical machine translation models. In 2014, Google and MILA (the Montreal deep learning group) simultaneously introduced end-to-end neural network models for translation. At WMT15, MILA set the state of the art for English-to-German translation. I'll talk briefly about traditional statistical machine translation and how NMT improves on these methods. I'll highlight the differences between Google's sequence-to-sequence models and Montreal's attention-based mechanisms, briefly drawing a parallel to other attention-based models such as Jason Weston's memory networks. I'll illustrate the practical implementation of these models with a brief overview and some code snippets from Fuel and Blocks, the data processing and deep learning libraries developed at MILA.

|

| 09/16/15 - | Talk: "Towards End-To-End Speech Recognition" by Tara Sainath (Google) [slides] [notes] [abstract]

In this talk, I will discuss efforts in our group at Google toward replacing various parts of the acoustic modeling pipeline with neural networks. First, I will describe a new modeling approach known as Convolutional, Long Short-Term Memory, Deep Neural Networks (CLDNNs), and why this architecture makes sense for speech tasks. Next, I will talk about using CLDNNs for raw-waveform modeling, which allows us to remove the front-end log-mel filterbank feature computation. Finally, I will discuss Connectionist Temporal Classification (CTC), which removes the need for a prior alignment and for context-dependent (CD) states.

|
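Here is why CTC (from the end-to-end speech talk) needs no prior alignment. The network emits one symbol or a special blank per frame, and a fixed many-to-one mapping collapses any frame-level path into a label sequence by merging repeated symbols and then deleting blanks. The likelihood of a transcript is the total probability of all paths that collapse to it. The sketch below shows the collapse rule and a brute-force version of that sum. It assumes per-frame probabilities given as dicts and "-" as the blank symbol. Practical implementations compute the same sum with a dynamic-programming forward algorithm, since enumeration is exponential in the number of frames.

```python
from itertools import product

BLANK = "-"  # blank symbol chosen for this illustration


def ctc_collapse(path, blank=BLANK):
    """Merge consecutive repeats, then drop blanks: 'hh-e-ll-lo' -> 'hello'."""
    out, prev = [], None
    for symbol in path:
        if symbol != prev and symbol != blank:
            out.append(symbol)
        prev = symbol
    return "".join(out)


def ctc_likelihood_bruteforce(frame_probs, alphabet, target, blank=BLANK):
    """Sum the probability of every frame-level path that collapses to target.
    frame_probs[t][s] is the probability of symbol s at frame t; frames are
    treated as conditionally independent, as CTC assumes."""
    total = 0.0
    for path in product(alphabet, repeat=len(frame_probs)):
        if ctc_collapse(path, blank) == target:
            p = 1.0
            for t, symbol in enumerate(path):
                p *= frame_probs[t][symbol]
            total += p
    return total


print(ctc_collapse("hh-e-ll-lo"))  # hello
uniform = [{"-": 0.5, "a": 0.5}] * 2
print(ctc_likelihood_bruteforce(uniform, ["-", "a"], "a"))  # 0.75: paths -a, a-, aa
```

The blank is what lets a doubled letter survive: "ll" collapses to one "l", while "l-l" keeps both.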

| 09/08/15 - | Talk: "Analysis-by-Synthesis for Source Separation and Speech Recognition" by Michael Mandel (Brooklyn College) [slides] [notes] [abstract]

Separating speech from noise with a single microphone is a highly underdetermined task, requiring a strong model of speech to be successful. This talk will present two such models: the first combines neural networks with exemplar-based approaches for speech separation and recognition, and the second provides a novel method for linking noise suppression using spectral masks with speech recognition using cepstral-domain features. The first system aims to reconstruct damaged or obscured speech using a concatenative speech synthesizer. This synthesizer is driven by a deep neural network-based selection function that predicts the similarity between pairs of noisy and clean speech "chunks". On the small-vocabulary CHiME2-GRID corpus, the resulting noise-free syntheses have speech quality much higher than that of similar approaches, almost as high as the original clean speech, but with lower intelligibility. The second system uses a full large-vocabulary continuous speech recognition system as a structured prior model of speech and poses the estimation of the mel-frequency cepstral coefficients (MFCCs) of the clean speech as an optimization problem. It thus finds the clean MFCCs that minimize a combination of the distance to reliable regions of the noisy observation and the negative log-likelihood under the recognizer. This approach reduces speech recognition errors on the medium-vocabulary AURORA4 task.

|

| 07/28/15 - | Talk: "Conditional and Recurrent Variational Inference" by Kyle Kastner (University of Montreal) [slides] [notes] [abstract]

Variational inference is a general method for learning a model from data. By encoding our assumptions about the data we wish to model, we can learn structured latent variable models that express those high-level assumptions as conditional relationships. Recent advances in variational inference have vastly improved the efficiency of such models, enabling interesting advances in modeling complex inputs such as images and time series. I will talk about what variational inference is, how it is applied in modern neural networks, give a (brief) introduction to recurrent neural networks, and show how to leverage this framework to learn structured models with latent variables over time.

|
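Two ingredients that make variational inference practical in neural networks, as in the variational autoencoder, are the reparameterization trick and the closed-form KL divergence between a diagonal Gaussian posterior and a standard-normal prior. The sketch below assumes exactly that Gaussian setup. It is a generic illustration, not the conditional or recurrent models from the variational inference talk, and it uses log-variances, a common convention for keeping sigma positive.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1). The randomness lives in eps,
    so z is a deterministic, differentiable function of mu and log_var."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu, log_var = [2.0, -1.0], [0.0, math.log(0.25)]
samples = [reparameterize(mu, log_var, rng) for _ in range(20000)]
print([sum(s[i] for s in samples) / len(samples) for i in range(2)])  # close to mu
print(kl_to_standard_normal(mu, log_var))
```

In a training loop, the KL term is added to a reconstruction loss computed from z, and gradients flow through mu and log_var because eps is sampled independently of them.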

| 07/14/15 - | Tutorial: currennt by Zhuo Chen (Columbia) |

| 07/01/15 - | Talk: "Complex-Valued Neural Network Experiments" by Andy Sarroff (Dartmouth) [notes] |

| 03/25/15 - | Paper discussion: Neural Turing Machines [paper] |

| 03/04/15 - | Paper discussion: Recently proposed stochastic optimization techniques, including Adagrad, RMSprop, Adam, and Adadelta [notes] |

| 02/18/15 - | Talk: "Learning to manipulate symbols" by Wojciech Zaremba (NYU, Facebook, Google) [slides] [notes] [abstract]

Machine learning approaches have proven highly effective for statistical pattern recognition problems, such as those encountered in speech or vision. However, contemporary statistical models barely address high-level reasoning problems and symbolic manipulation. I consider two tasks that require such skills: (1) finding mathematical identities, and (2) evaluating computer programs.

We explore how learning can be applied to the discovery of mathematical identities. Specifically, we propose methods for finding computationally efficient versions of a given target expression, that is, a new expression that computes an identical result to the target but has lower complexity (in time and/or space). ref.: "Learning to Discover Efficient Mathematical Identities"

Execution of computer programs requires dealing with multiple nontrivial concepts. To execute a program, a system has to understand numerical operations, the branching of if-statements, the assignment of variables, the compositionality of operations, and many more. We show that Recurrent Neural Networks (RNNs) with Long Short-Term Memory (LSTM) units can accurately evaluate short, simple programs. The LSTM reads the program character by character and computes the program's output. ref.: "Learning to Execute" |

| 02/11/15 - | Paper discussion: "Learning to Execute" by Wojciech Zaremba and Ilya Sutskever [paper] [notes] |

| 01/28/15 - | Tutorial: Lasagne [slides] [code] |

| 12/17/14 - | Talk: "Kernels vs. DNNs for Speech Recognition" by Avner May (Columbia University) [slides] [abstract]

In this talk, I'll discuss recent work on scaling kernel methods to large-scale problems in speech recognition, where deep neural networks have prevailed. To overcome the typical N^2 limitation of kernel methods (in the number N of training examples), we approximate kernel functions with features derived from random projections. Furthermore, we present new schemes for combining kernel functions as a way of learning representations. We validate our approaches with extensive experiments on real-world speech datasets on the task of acoustic modeling. We show that our kernel models are competitive with well-engineered deep neural networks (DNNs) on these problems.

|
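One standard way to "approximate kernel functions with features derived from random projections", as in the kernels-vs-DNNs talk, is the random Fourier features construction of Rahimi and Recht for shift-invariant kernels. Whether the talk uses exactly this variant is an assumption here. For the Gaussian kernel, projecting inputs onto random directions w ~ N(0, I/σ²), adding a random phase, and taking cosines gives features whose inner product approximates k(x, y). A linear model on these features can then stand in for the kernel machine without forming the N × N kernel matrix.

```python
import math
import random


def gaussian_kernel(x, y, sigma=1.0):
    """Exact k(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2 * sigma ** 2))


def random_fourier_features(dim, num_features, sigma=1.0, seed=0):
    """Return a map z(.) with E[z(x) . z(y)] = gaussian_kernel(x, y, sigma)."""
    rng = random.Random(seed)
    W = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(dim)] for _ in range(num_features)]
    b = [rng.uniform(0.0, 2 * math.pi) for _ in range(num_features)]
    scale = math.sqrt(2.0 / num_features)

    def z(x):
        return [scale * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + bias)
                for w, bias in zip(W, b)]

    return z


x, y = [0.3, -0.2, 0.5], [0.1, 0.4, -0.3]
z = random_fourier_features(dim=3, num_features=20000)
approx = sum(a * b for a, b in zip(z(x), z(y)))
print(gaussian_kernel(x, y), approx)  # the two values should be close
```

The approximation error shrinks roughly as 1/sqrt(num_features), which is the trade-off such methods tune against training cost.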

| 12/03/14 - | Talk: "From Scattering to Spectral Networks" by Joan Bruna (Facebook AI Research, UC Berkeley) [slides] [notes] [abstract]

Object and texture recognition require extracting stable, discriminative information from noisy, high-dimensional signals. Our perception of image and audio patterns is invariant to several transformations, such as illumination changes, translations, or frequency transpositions, as well as small geometrical perturbations. Similarly, textures are examples of stationary, non-Gaussian, intermittent processes that can be recognized from only a few realizations.

Scattering operators construct a non-linear signal representation by cascading wavelet modulus decompositions, shown to be stable to geometric deformations, and capturing key geometrical properties and high-order moments with low-variance estimators. Thanks to these properties, the resulting representation is very efficient on several recognition and reconstruction tasks. Moreover, scattering moments provide an alternative theory of multifractal analysis, where intermittency and self-similarity can be consistently estimated from few realizations. Although stability to geometric perturbations is necessary, it is not sufficient for the most challenging object recognition tasks, which require learning the invariance from data. We shall discuss how scattering operators can be generalized to this scenario with spectral networks, highlighting the close links between structured dictionary learning, deep neural networks and spectral graph theory. |

| 11/19/14 - | Paper discussion: "Invariant Scattering Convolutional Networks" by Joan Bruna and Stephane Mallat [paper] and "Spectral Networks and Deep Locally Connected Networks on Graphs" by Joan Bruna et al. [paper] |

| 11/12/14 - | Talk: "Long-Short-Term Memory Recurrent Neural Networks for acoustic modelling in continuous speech recognition" by Andrew Senior (Google) [slides] [notes] [abstract]

Our recent work has shown that deep Long Short-Term Memory Recurrent Neural Networks (LSTM-RNNs) give improved accuracy over deep neural networks for large vocabulary continuous speech recognition. I will describe our task of recognizing speech from Google Now in dozens of languages and give an overview of LSTM-RNNs for acoustic modelling. I will describe distributed Asynchronous Stochastic Gradient Descent training of LSTMs on clusters of hundreds of machines, and show improved results through sequence-discriminative training.

|

| 11/05/14 - | Tutorial: Chapter 3 from "Neural Networks and Deep Learning" by Michael Nielsen [paper] [notes] |

| 10/15/14 - | Tutorial: Theano [slides] [code] |

| 10/08/14 - | Talk: "Expectation Backpropagation" by Daniel Soudry (Columbia University) [slides] [notes] [abstract]

Multilayer Neural Networks (MNNs) are commonly trained using gradient descent-based methods, such as BackPropagation (BP). Inference in probabilistic graphical models is often done using variational Bayes methods, such as Expectation Propagation (EP). We show how an EP based approach can also be used to train deterministic MNNs. Specifically, we approximate the posterior of the weights given the data using a “mean-field” factorized distribution, in an online setting. Using online EP and the central limit theorem we find an analytical approximation to the Bayes update of this posterior, as well as the resulting Bayes estimates of the weights and outputs.

Despite its different origin, the resulting algorithm, Expectation BackPropagation (EBP), is very similar to BP in form and efficiency. However, it has several additional advantages: (1) Training is parameter-free, given initial conditions (prior) and the MNN architecture. This is useful for large-scale problems, where parameter tuning is a major challenge. (2) The weights can be restricted to have discrete values. This is especially useful for implementing trained MNNs in precision-limited hardware chips, thus improving their speed and energy efficiency by several orders of magnitude. We test the EBP algorithm numerically on eight binary text classification tasks. In all tasks, EBP outperforms (1) standard BP with the optimal constant learning rate and (2) the previously reported state of the art. Interestingly, EBP-trained MNNs with binary weights usually perform better than MNNs with real weights, if we average the MNN output using the inferred posterior. |

| 09/30/14 - | Tutorial: Chapter 2 from "Neural Networks and Deep Learning" by Michael Nielsen [paper] [notes] |

| 09/24/14 - | Paper discussion: "Fast Dropout Training" by Sida Wang and Christopher Manning [paper] and "Maxout Networks" by Ian Goodfellow et al. [paper] [notes] |

| 09/17/14 - | Tutorial: Chapter 1 from "Neural Networks and Deep Learning" by Michael Nielsen [paper] [notes] |

| 09/10/14 - | Fall semester planning, optional tutorial on chapters 2 and 3 from "Deep Learning" by Yoshua Bengio et al. [paper] |

| 07/23/14 - | Talk: "Deep learning and feature learning for music information retrieval" by Sander Dieleman (Ghent University, Spotify) [slides] [notes] [abstract]

Deep learning has become a very popular approach for solving speech recognition and computer vision problems in recent years. In this talk we'll explore how deep learning and feature learning techniques can be used for music information retrieval (MIR) problems. A few different approaches to learn features from audio signals will be discussed, including unsupervised feature learning using the spherical K-means algorithm and supervised feature learning using convolutional neural networks. We'll evaluate the utility of the learned features in the context of genre classification, automatic tagging and content-based music recommendation.

|

| 07/09/14 - | Paper discussion: "Practical recommendations for gradient-based training of deep architectures" by Yoshua Bengio [paper] [notes] |

| 06/25/14 - | Tutorial: Theano [slides] [code] |

Current Organizers

Oscar Chang {firstname.lastname} at cs.columbia.edu

Chad DeChant {lastname} at cs.columbia.edu

Harvey Wu {lastname.firstname} at cs.columbia.edu

Neil Chen {firstname.lastname} at cs.columbia.edu

Past Organizers

Colin Raffel (founder)