We're working with speech recognition on some very noisy speech files that were apparently recorded by a mic some distance from the speaker in a noisy office environment.

One special tool we have at our disposal is a trained, noise-robust pitch tracker derived from the RATS data, which consisted of pairs of files: a clean version and a badly corrupted version that had been transmitted over a variety of noisy radio links. We used these to train our Subband Autocorrelation Classification (SAcC) pitch tracker, which does well in conditions much too noisy for conventional explicit pitch trackers. Informally, it seems that the RATS-trained pitch tracker does well on our noisy Babel data.

This could be used in several places (e.g., for improved voice activity detection), but one idea is to try to enhance speech by keeping just the harmonics corresponding to the "known" pitch and filtering out everything in between.

I've been thinking about how to do this ever since Avery Wang did it to separate vocals from accompaniment at CCRMA in 1996. Spectrogram masking is a bit too crude and messy; his technique was to "heterodyne" each harmonic down to DC, use a low-pass filter to extract its amplitude, then remodulate it back to its original position.
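To make the heterodyne idea concrete, here is a minimal Python sketch for a single component. It is not Wang's implementation: it assumes the harmonic sits at a constant, known frequency (the real technique follows a moving harmonic), and the function name `extract_harmonic` and the moving-average low-pass are my own placeholders.

```python
import cmath
import math

def extract_harmonic(x, sr, freq_hz, smooth_len):
    """Heterodyne the component of x at freq_hz down to DC, low-pass, remodulate.

    Assumes freq_hz is constant; a real tracker would follow a moving harmonic.
    """
    n = len(x)
    # Multiply by a complex exponential so the wanted component lands at 0 Hz.
    base = [x[i] * cmath.exp(-2j * math.pi * freq_hz * i / sr) for i in range(n)]
    # Crude low-pass: centred moving average of about smooth_len samples.
    # (An assumption: any low-pass narrower than the harmonic spacing would do.)
    half = smooth_len // 2
    env = []
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        env.append(sum(base[lo:hi]) / (hi - lo))
    # Shift back up to freq_hz; the factor 2 restores the real sinusoid's amplitude.
    return [2.0 * (env[i] * cmath.exp(2j * math.pi * freq_hz * i / sr)).real
            for i in range(n)]
```

The complex envelope `env` carries both the amplitude and the phase of the harmonic, which is why remodulating it puts the component back where it came from.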

Of course, a comb filter picks out all the harmonics in a single stroke, but it needs everything to be at a constant pitch. Rather than trying to demodulate each harmonic, my idea is to use time-varying resampling ("varispeed") to pitch-shift the target using the known pitch at each time so that the target pitch becomes constant. Then you can filter, and, in theory, un-resample to get back the original signal, now with the gaps between the harmonics "dug out".
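As a rough illustration of the flattening step, the Python sketch below integrates the pitch track to get a warped time axis on which every pitch cycle takes the same number of samples, then resamples onto a uniform grid on that axis. It is only a sketch under my own assumptions (a per-sample pitch track that is always positive, plain linear interpolation); `flatten_pitch` is a hypothetical name, not the function in the Matlab code.

```python
import math

def flatten_pitch(x, f0, target_hz):
    """Resample x so that a pitch track f0 (Hz, one value per sample, all > 0)
    comes out at a constant target_hz. Returns (flattened signal, warp map)."""
    # warped[n] is where input sample n lands on the flattened time axis:
    # stretches with high pitch are spread over more output samples.
    warped = [0.0]
    for n in range(1, len(x)):
        warped.append(warped[-1] + 0.5 * (f0[n - 1] + f0[n]) / target_hz)
    y = []
    j = 0
    for m in range(int(math.floor(warped[-1])) + 1):
        # Find the input segment [j, j+1] whose warped positions bracket m.
        while j < len(warped) - 2 and warped[j + 1] < m:
            j += 1
        frac = (m - warped[j]) / (warped[j + 1] - warped[j])
        # Linear interpolation between neighbouring input samples.
        y.append(x[j] + frac * (x[j + 1] - x[j]))
    return y, warped
```

The warp map is returned alongside the signal because it is exactly what you need later to get back to the original timebase.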

The problem was that I'd always gotten tied up trying to make sense of how to undo the time resampling, since it's a confusing operation. But for the ehist project on energy histogram equalization, I ended up implementing piecewise linear mappings that are exactly invertible, and which thus gave me the piece I needed for the pitch-flattening.
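The reason piecewise-linear maps are so convenient here is that inverting one just means swapping the roles of the two knot lists. Here is a small Python sketch of that idea; the names `pwl_map` and `pwl_inverse` are hypothetical and this is not the ehist code itself. It assumes both knot lists are strictly increasing.

```python
from bisect import bisect_right

def pwl_map(t, src, dst):
    """Map t through the piecewise-linear function with knots (src[k], dst[k]).

    src and dst must be strictly increasing and the same length (>= 2).
    """
    # Locate the segment containing t, clamping to the first/last segment.
    k = min(max(bisect_right(src, t) - 1, 0), len(src) - 2)
    frac = (t - src[k]) / (src[k + 1] - src[k])
    return dst[k] + frac * (dst[k + 1] - dst[k])

def pwl_inverse(u, src, dst):
    """Inverse of pwl_map: the same interpolation with the knot lists swapped."""
    return pwl_map(u, dst, src)

# Example: original sample times and where they land after warping.
src = [0.0, 1.0, 2.0, 3.0]
dst = [0.0, 0.5, 2.0, 2.5]
u = pwl_map(1.4, src, dst)       # forward: original time -> warped time
t = pwl_inverse(u, src, dst)     # back again: warped time -> original time
```

Note that it is the time map that inverts exactly (up to floating-point rounding); the resampled waveform itself still goes through interpolation in each direction.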

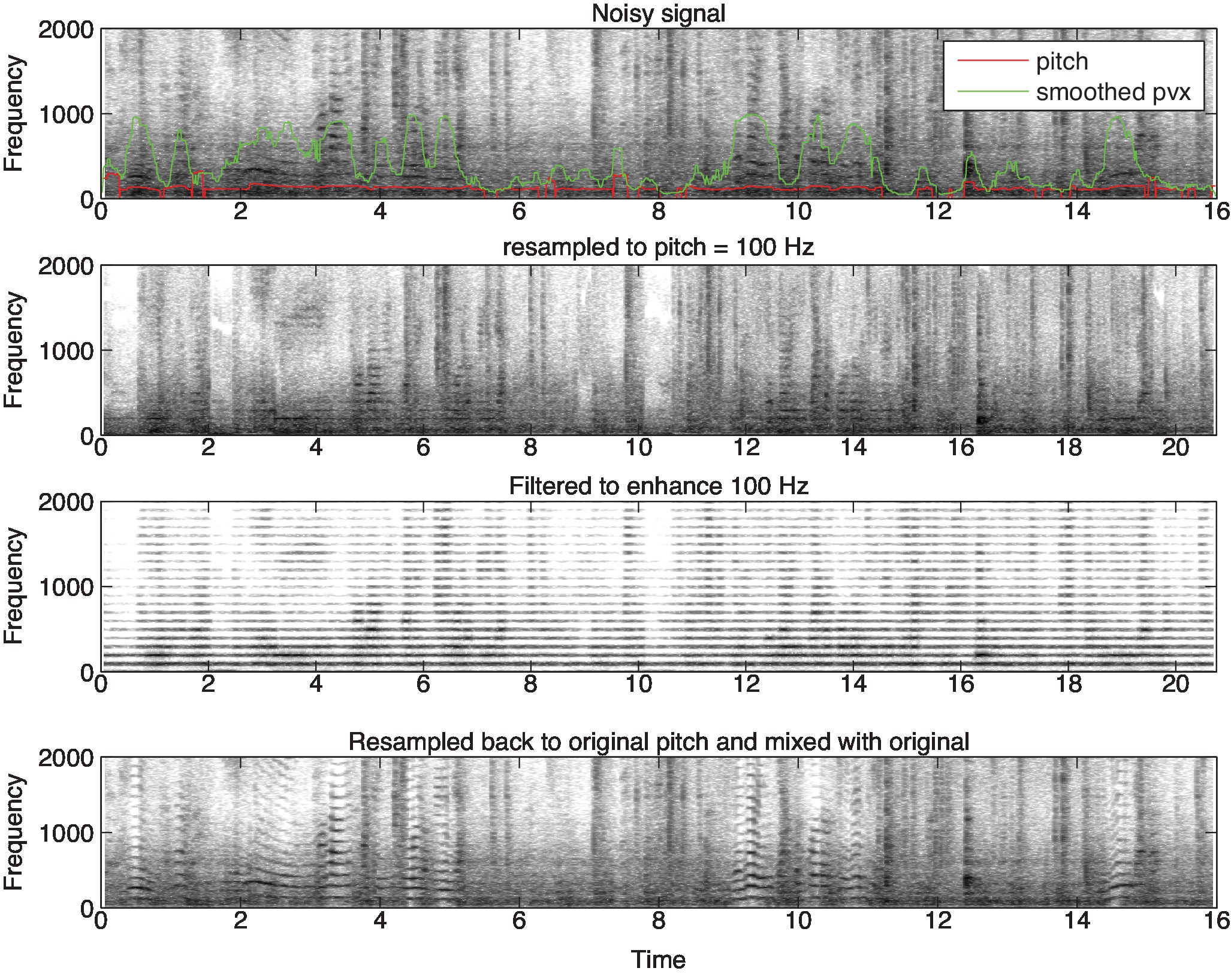

The top pane of the figure above shows a noisy OP1_204 test fragment (I started with the Wiener-filtered output), along with the pitch track and prob(vx) from SAcC-RATS. I tweaked the prior on voicing to make it guess at a pitch even when the evidence was weak, and I smoothed the prob(vx) with a 200ms median filter.
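For reference, the median smoothing amounts to something like the Python sketch below. The 10 ms frame hop (so roughly 21 frames for 200 ms) is my assumption for illustration, as is the `median_smooth` name; check the actual frame rate of your pitch tracker output.

```python
from statistics import median

def median_smooth(values, width):
    """Running median over a centred window of `width` frames (use an odd width).

    Windows are simply truncated at the start and end of the track.
    """
    half = width // 2
    n = len(values)
    return [median(values[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]

# Assumed 10 ms hop, so 21 frames is about 200 ms.
pvx = [0.1] * 30 + [0.9] * 2 + [0.1] * 30 + [0.9] * 40
smoothed = median_smooth(pvx, 21)
```

The point of a median (rather than a mean) is that brief voicing blips like the two-frame one above disappear entirely, while sustained voiced regions keep their sharp edges.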

The next pane is that signal with time-varying resampling to flatten the inferred pitch track to 100 Hz. Then we put it through a comb filter to remove noise in-between the harmonics (third pane).
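Once the pitch is constant, the comb filter can be as simple as averaging the signal with copies of itself delayed by whole pitch periods. The Python sketch below is one such feedforward comb; it is not necessarily the filter used in pitchfilter, and the 80-sample period assumes an 8 kHz sample rate (which the example file name suggests) with the 100 Hz target.

```python
def comb_filter(x, period, taps=4):
    """Feedforward comb: average of x and (taps - 1) copies delayed by whole periods.

    Components at multiples of sr/period add coherently and pass unchanged;
    energy between those harmonics is attenuated.
    """
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k in range(taps):
            if n - k * period >= 0:      # treat samples before the start as zero
                acc += x[n - k * period]
        y.append(acc / taps)
    return y
```

More taps give narrower teeth (more noise removed) at the cost of smearing over more pitch periods, so the pitch needs to be flattened accurately over a longer stretch.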

The final pane shows this cleaned signal un-resampled, to get back the original timebase. I also cross-fade between the pitch-filtered version and the original unprocessed signal using the smoothed p(vx) as the weighting.
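The cross-fade is just a per-sample weighted sum, with the frame-rate p(vx) interpolated up to the sample rate. A minimal Python sketch, assuming linear interpolation between frames and a hypothetical `crossfade` helper (the `hop` argument is the number of samples per p(vx) frame, e.g. 80 for 10 ms frames at 8 kHz):

```python
def crossfade(filtered, original, pvx, hop):
    """Mix filtered and original sample by sample, weighted by voicing probability.

    pvx holds one value per frame (at least two frames); hop is samples per frame.
    """
    last = len(pvx) - 1
    out = []
    for i in range(min(len(filtered), len(original))):
        pos = min(i / hop, last)          # fractional frame index for sample i
        k = min(int(pos), last - 1)
        frac = pos - k
        w = pvx[k] + frac * (pvx[k + 1] - pvx[k])
        # w near 1: trust the pitch-filtered signal; w near 0: keep the original.
        out.append(w * filtered[i] + (1.0 - w) * original[i])
    return out
```

This way unvoiced regions, where the pitch track is just a guess, fall back to the unprocessed signal instead of being combed at a meaningless pitch.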

This all works, I think. Unfortunately, it doesn't sound that good (yet?). But I think it's the basis of a really nice story - if only we can get some kind of ASR gain out of it.

The Matlab code for this is at https://github.com/dpwe/pitchfilter . The figure above is created within the pitchfilter command, e.g.

    >> [d,sr] = wavread('nr1ex_wiener-8k.wav');
    >> y = pitchfilter(d,sr);
    >> wavwrite(y, sr, 'nr1ex_w+pitchfilt.wav');