Brain Beats: Tempo Tracking in EEG Data

| Authors | Sebastian Stober, Thomas Prätzlich, Meinard Müller |

| Affiliation | University of Western Ontario; International Audio Laboratories Erlangen |

| Code | brainbeats.zip |

| Dependencies | OpenMIIR Dataset (Github); deepthought project (Github) for data export; Tempogram Toolbox |

Opening Question

Can we track the beat or the tempo of a piece of music in brain waves recorded while a person listens to it? This is the question we tackled at the hack day at ISMIR 2015.

Data

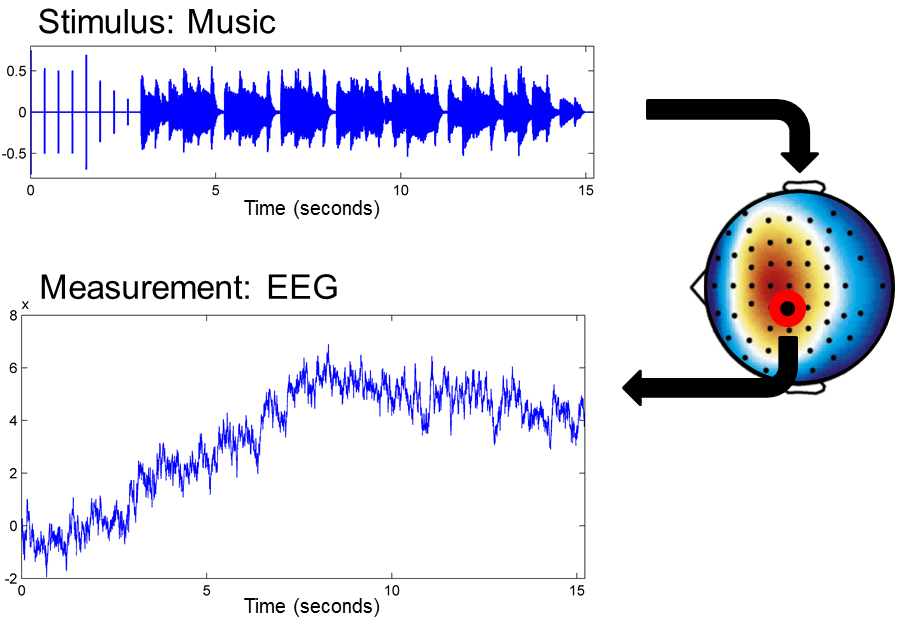

The OpenMIIR dataset1) comprises EEG recordings2) of people listening to 12 short music pieces. The following figure shows the waveform of the acoustic stimulus (a music recording) and the corresponding EEG signal.

Problem Specification

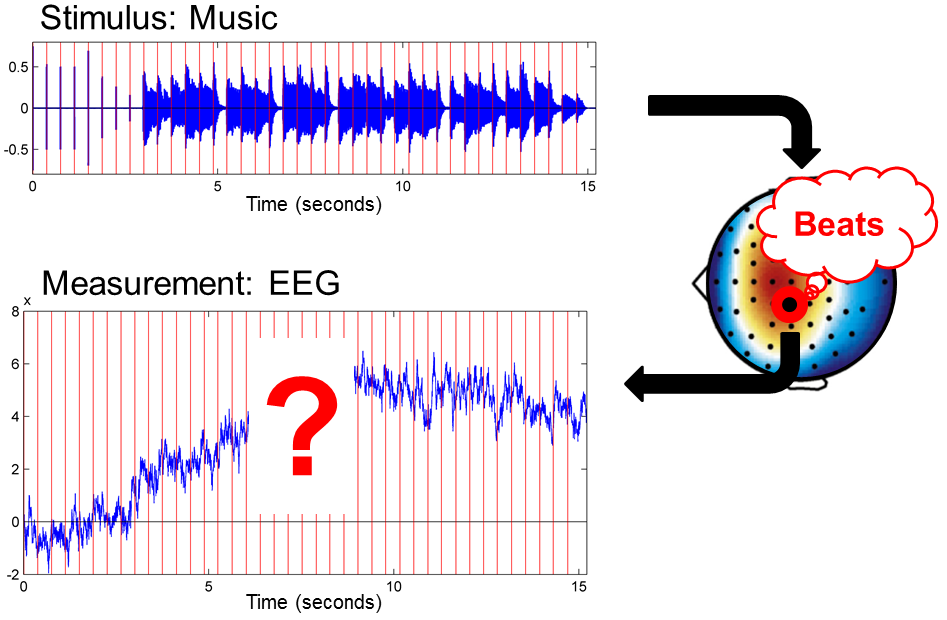

We wanted to know whether the tempo can be tracked in the EEG signal in the same way as it is tracked in audio data.

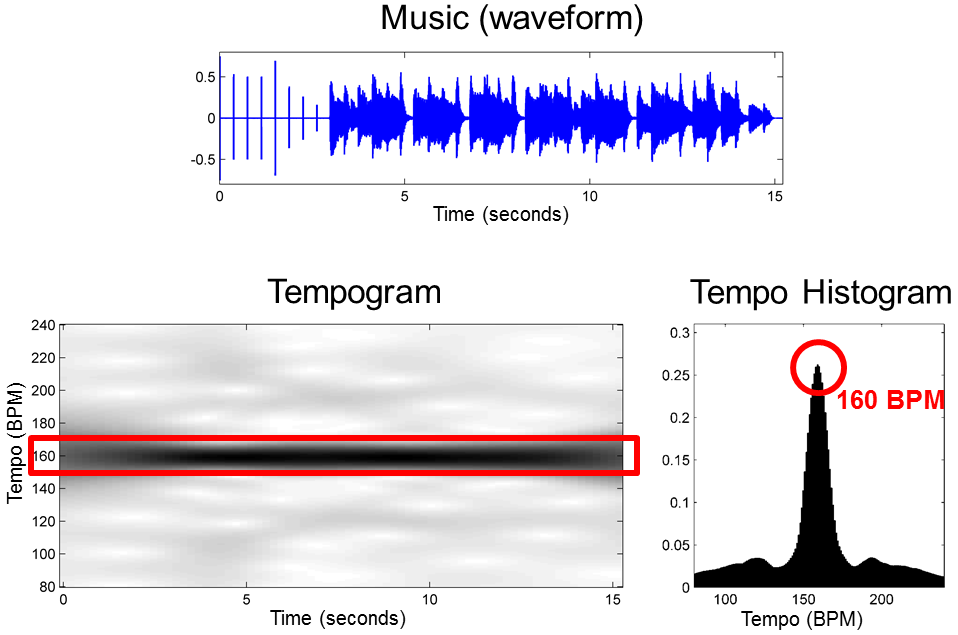

Tempogram for Music Recording

Using the Tempogram Toolbox3), we converted the music recording into a time-tempo representation (tempogram). Aggregating the time-tempo information over time, we derived a tempo histogram from the tempogram. As shown by the following figure, this result reveals the correct tempo.

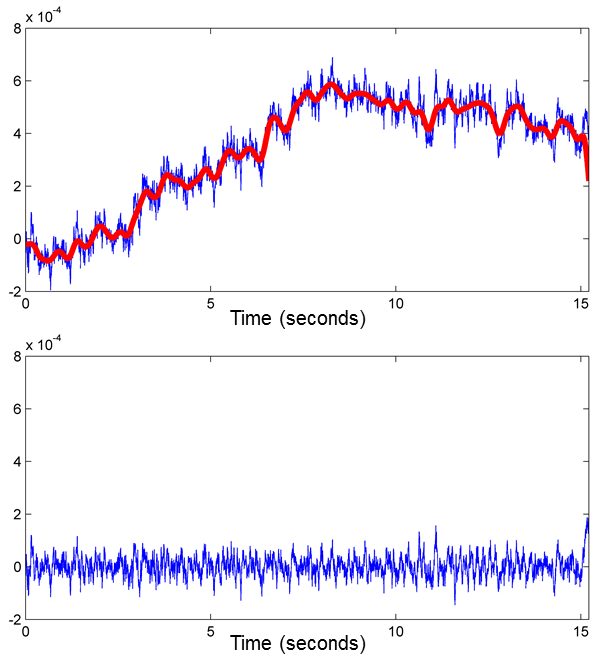
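To make the two steps above concrete, here is a minimal sketch in plain Python of a Fourier-based tempogram and the tempo histogram derived from it. It is not the Tempogram Toolbox code (which is MATLAB). The function names, the 4-second Hann window, the 1-second hop, the 60 to 180 BPM search range, and the synthetic novelty curve are all assumptions made for illustration.

```python
import cmath
import math


def fourier_tempogram(novelty, fs, tempi_bpm, win_sec=4.0, hop_sec=1.0):
    """Return a list of frames; each frame holds one magnitude per tempo in tempi_bpm."""
    win_len = int(round(win_sec * fs))
    hop = max(1, int(round(hop_sec * fs)))
    # Hann window reduces leakage between neighbouring tempo values.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * k / (win_len - 1))
              for k in range(win_len)]
    frames = []
    for start in range(0, len(novelty) - win_len + 1, hop):
        segment = novelty[start:start + win_len]
        frame = []
        for bpm in tempi_bpm:
            freq_hz = bpm / 60.0  # beats per minute -> beats per second
            coeff = sum(
                x * w * cmath.exp(-2j * math.pi * freq_hz * (start + k) / fs)
                for k, (x, w) in enumerate(zip(segment, window))
            )
            frame.append(abs(coeff))
        frames.append(frame)
    return frames


def tempo_histogram(tempogram):
    """Aggregate the tempogram over time: one summed magnitude per tempo."""
    return [sum(column) for column in zip(*tempogram)]


# Synthetic novelty curve (assumption): one impulse every 0.5 s, i.e. 120 BPM,
# sampled at a hypothetical feature rate of 50 Hz for 20 seconds.
fs = 50
novelty = [1.0 if n % 25 == 0 else 0.0 for n in range(20 * fs)]
tempi = list(range(60, 181))  # tempo search range in BPM (assumption)

histogram = tempo_histogram(fourier_tempogram(novelty, fs, tempi))
estimated_bpm = tempi[histogram.index(max(histogram))]
print(estimated_bpm)
```

For this synthetic input the histogram peaks at 120 BPM. On real recordings the novelty curve would first have to be computed from the audio, and the window length trades time resolution against tempo resolution.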

Pre-Processing of EEG data

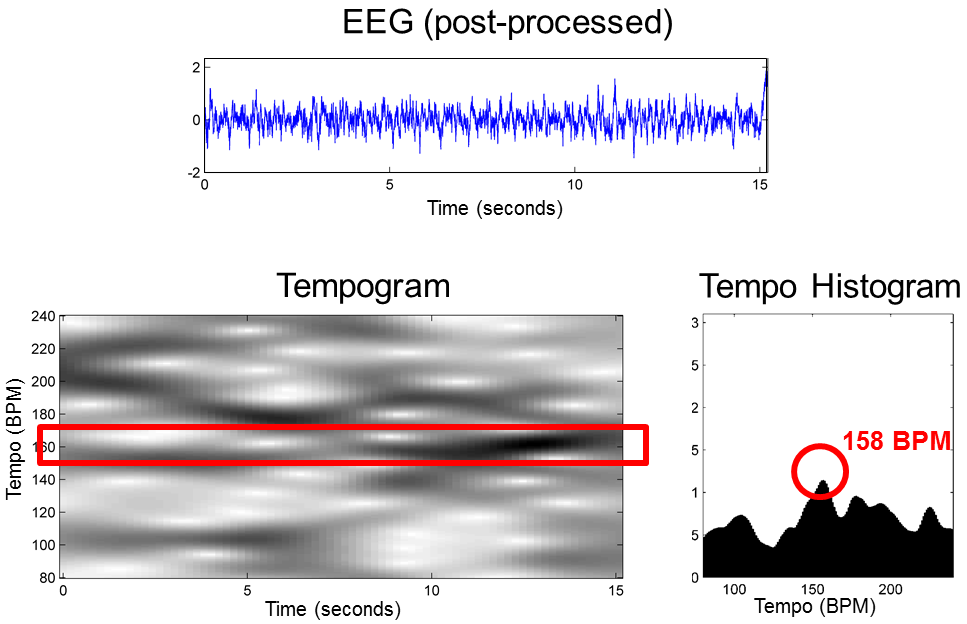

Our idea was to apply similar techniques to the EEG data. However, because EEG signals are very noisy, we applied some pre-processing first. We aggregated several EEG channels into a single signal. Then, we applied a suitable high-pass filter and normalized the signal by subtracting a moving-average curve.
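The three pre-processing steps can be sketched as follows. This is an illustration under stated assumptions, not the MATLAB code used for the figures. The page does not say which channels were combined, which filter was used, or how long the averaging window was. Here the channels are simply averaged, the high-pass is a first-order RC filter, and the cutoff (0.5 Hz), window length (one second), sampling rate, and synthetic input are all hypothetical.

```python
import math


def aggregate_channels(channels):
    """Average equally long EEG channels sample by sample (assumed aggregation)."""
    return [sum(samples) / len(samples) for samples in zip(*channels)]


def high_pass(signal, fs, cutoff_hz):
    """First-order RC high-pass filter; a stand-in for the unspecified filter."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = rc / (rc + 1.0 / fs)
    out = [0.0]  # start at zero so a constant offset is removed entirely
    for i in range(1, len(signal)):
        out.append(alpha * (out[-1] + signal[i] - signal[i - 1]))
    return out


def subtract_moving_average(signal, win_len):
    """Subtract a centred moving average; the window is truncated at the borders."""
    half = win_len // 2
    prefix = [0.0]  # prefix sums give each local mean in constant time
    for x in signal:
        prefix.append(prefix[-1] + x)
    out = []
    for i, x in enumerate(signal):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        out.append(x - (prefix[hi] - prefix[lo]) / (hi - lo))
    return out


# Synthetic stand-in for EEG (assumption): three channels sharing a 2 Hz
# oscillation, each with its own offset and a slow linear drift.
fs = 64  # hypothetical sampling rate in Hz
n = 10 * fs
channels = [
    [offset + 0.01 * t + math.sin(2 * math.pi * 2.0 * t / fs) for t in range(n)]
    for offset in (5.0, -3.0, 1.0)
]

mixed = aggregate_channels(channels)
filtered = high_pass(mixed, fs, cutoff_hz=0.5)
normalized = subtract_moving_average(filtered, win_len=fs)
```

The high-pass removes offsets and slow drift, while the moving-average subtraction removes whatever local baseline remains, so that periodic fluctuations stand out in the resulting curve.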

Tempogram for EEG data

We then used the resulting signal as a kind of novelty curve and applied the same tempo estimation techniques as in the music case. The resulting EEG tempogram and EEG tempo histogram are shown in the following figure.

Resources

The MATLAB code and the data used to generate the figures above are provided in brainbeats.zip (see the Code entry at the top of this page).

The algorithmic details are described in the original article by Grosche and Müller4) and in the textbook Fundamentals of Music Processing5).