DeepDreamEffect

| Authors | Christian Dittmar |
| Affiliation | International Audio Laboratories Erlangen |
| Email | christian.dittmar@audiolabs-erlangen.de |
| Code | https://github.com/stefan-balke/hamr2015-deepdreameffect |

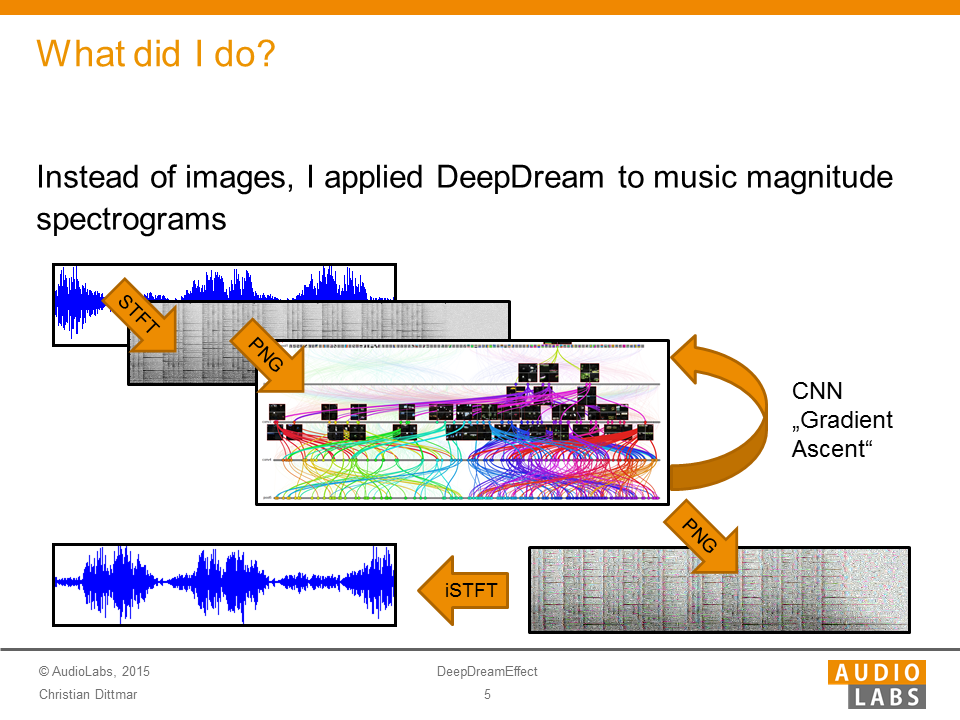

What did I do

I used Google's DeepDream processing as an audio effect. To do so, I exported the magnitude spectrogram of a music recording as the RGB channels of PNG images and applied so-called gradient ascent with pre-trained networks to these images. Afterwards, I converted the resulting images back to magnitude spectrograms and resynthesized audio from them using Griffin and Lim's method, which iteratively estimates a phase to go with the given magnitudes.
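The image round trip and the resynthesis step can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the code from the repository: the function names, the 80 dB dynamic range, the FFT and hop sizes, and the choice to copy one log-compressed spectrogram into all three RGB channels are all made up for the example. The DeepDream step itself is left out; it would modify `img` between encoding and decoding.

```python
import numpy as np

def stft(x, n_fft=512, hop=128):
    """Short-time Fourier transform (periodic Hann window); returns (bins, frames)."""
    win = np.hanning(n_fft + 1)[:-1]
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * win for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1).T

def istft(X, n_fft=512, hop=128):
    """Weighted overlap-add inverse of stft()."""
    win = np.hanning(n_fft + 1)[:-1]
    frames = np.fft.irfft(X.T, n=n_fft, axis=1) * win
    n_frames = frames.shape[0]
    out = np.zeros(n_fft + hop * (n_frames - 1))
    norm = np.zeros_like(out)
    for i in range(n_frames):
        out[i * hop:i * hop + n_fft] += frames[i]
        norm[i * hop:i * hop + n_fft] += win ** 2
    return out / np.maximum(norm, 1e-8)

def magnitude_to_rgb(mag, db_range=80.0):
    """Encode a magnitude spectrogram as an 8-bit RGB image of shape (bins, frames, 3).

    Assumption: the same log-compressed magnitudes go into R, G and B.
    """
    ref = max(float(mag.max()), 1e-10)
    db = 20.0 * np.log10(np.maximum(mag, 1e-10) / ref)  # 0 dB at the peak
    db = np.clip(db, -db_range, 0.0)
    gray = np.round(255.0 * (db + db_range) / db_range).astype(np.uint8)
    # Flip vertically so that low frequencies end up at the bottom of the image.
    img = np.repeat(gray[::-1, :, None], 3, axis=2)
    return img, ref

def rgb_to_magnitude(img, ref, db_range=80.0):
    """Decode an RGB image back to magnitudes by averaging the three channels."""
    gray = img.astype(np.float64).mean(axis=2)[::-1]
    db = gray / 255.0 * db_range - db_range
    return ref * 10.0 ** (db / 20.0)

def griffin_lim(mag, n_iter=50, n_fft=512, hop=128, seed=0):
    """Estimate a time signal from magnitudes only (Griffin and Lim's iteration)."""
    rng = np.random.default_rng(seed)
    phase = np.exp(2j * np.pi * rng.random(mag.shape))  # random initial phase
    for _ in range(n_iter):
        x = istft(mag * phase, n_fft, hop)
        # Keep the given magnitudes, take the phase of the re-analysed signal.
        phase = np.exp(1j * np.angle(stft(x, n_fft, hop)))
    return istft(mag * phase, n_fft, hop)

# Toy input: one second of a 440 Hz sine at 8 kHz.
sr = 8000
x = np.sin(2 * np.pi * 440 * np.arange(sr) / sr)
img, ref = magnitude_to_rgb(np.abs(stft(x)))
# ... a DeepDream-style effect would modify `img` here ...
y = griffin_lim(rgb_to_magnitude(img, ref), n_iter=20)
```

The `uint8` array `img` can be written to a PNG file with any image library. Because of the 8-bit quantization and the clipped dynamic range, the round trip is lossy even before any image processing is applied.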

Since the networks were trained on natural images, this makes no sense musically. However, it gives interesting results:

Example 1: Piano

- Input signal
- Result using layer conv3 (MIT places network)
- Result using layer pool5 (MIT places network)

Example 2: Ethno

- Input signal
- Result using layer conv3 (MIT places network)

Example 3: Breakbeat

- Input signal (different drums encoded as RGB)
- Result using layer conv3 (MIT places network)

Libraries Used

- Anaconda Python Package
- Caffe Deep Learning Framework
- Pre-Trained Networks
- iPython Notebook
- MATLAB