LSTM Library Benchmarks

| Authors | Colin Raffel |
|---|---|
| Affiliation | Columbia University |
| Code | nntools GitHub, experiment GitHub |

I wrote a new Long Short-Term Memory (LSTM) implementation in Theano and benchmarked it against two existing libraries. The Theano/nntools results on this page are now out of date; the most up-to-date results are available in the experiment GitHub repository.

Experiment

I compared the following three libraries:

- CURRENNT

- RNNLIB

- rnntools (the Theano implementation described above)

I ran the CHiME noisy speech recognition experiment included with CURRENNT, using the default parameters supplied with CURRENNT. RNNLIB doesn't seem to support normally distributed weight initialization, so I also ran CURRENNT with uniformly distributed weights. However, CURRENNT can't randomly initialize biases, so the training dynamics still differ somewhat between the systems.
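To make the initialization difference concrete, here is a minimal Python sketch of the two weight schemes (normal vs. uniform) and of random vs. fixed biases. The function names `init_weights` and `init_biases`, the `scale` of 0.1 and the constant bias of 0.0 are hypothetical placeholders; they are not the actual defaults or APIs of CURRENNT or RNNLIB.

```python
import random
from typing import List, Optional


def init_weights(n_rows: int, n_cols: int, scheme: str,
                 scale: float = 0.1, seed: Optional[int] = None) -> List[List[float]]:
    """Return an n_rows x n_cols weight matrix as a list of lists.

    scheme="normal":  each weight ~ N(0, scale**2)
    scheme="uniform": each weight ~ U(-scale, scale)
    The default scale is a hypothetical placeholder, not a library default.
    """
    rng = random.Random(seed)
    if scheme == "normal":
        def draw() -> float:
            return rng.gauss(0.0, scale)
    elif scheme == "uniform":
        def draw() -> float:
            return rng.uniform(-scale, scale)
    else:
        raise ValueError(f"unknown scheme: {scheme!r}")
    return [[draw() for _ in range(n_cols)] for _ in range(n_rows)]


def init_biases(n: int, randomize: bool, scale: float = 0.1,
                constant: float = 0.0, seed: Optional[int] = None) -> List[float]:
    """Return n biases: random U(-scale, scale) values, or a fixed constant
    (mimicking a library that cannot randomize biases; 0.0 is an assumption)."""
    if randomize:
        rng = random.Random(seed)
        return [rng.uniform(-scale, scale) for _ in range(n)]
    return [constant] * n


# Example: uniform weights with fixed biases vs. normal weights with random biases.
w_uniform = init_weights(4, 3, "uniform", seed=0)
b_fixed = init_biases(4, randomize=False)
w_normal = init_weights(4, 3, "normal", seed=0)
b_random = init_biases(4, randomize=True, seed=0)
```

Even with the same weight scheme, two systems that differ only in how biases start out will follow different training trajectories, which is why the validation curves are not directly comparable.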

Results

The average time per epoch was:

- CURRENNT: 32 seconds

- RNNLIB: 740 seconds

- rnntools: 813.75 seconds
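The relative cost follows directly from the timings above. This short Python sketch computes how many times longer each library takes per epoch than CURRENNT; the `speedups` helper is just an illustration, not part of any of the benchmarked libraries.

```python
from typing import Dict

# Average seconds per epoch, as reported above.
epoch_seconds: Dict[str, float] = {
    "CURRENNT": 32.0,
    "RNNLIB": 740.0,
    "rnntools": 813.75,
}


def speedups(times: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Return, for each library, its epoch time divided by the baseline's."""
    base = times[baseline]
    return {name: t / base for name, t in times.items()}


for name, ratio in speedups(epoch_seconds, "CURRENNT").items():
    print(f"{name}: {ratio:.1f}x the time of CURRENNT")
```

With these numbers, RNNLIB takes about 23 times and rnntools about 25 times as long per epoch as CURRENNT, and rnntools is roughly 10% slower than RNNLIB.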

CURRENNT is the clear winner, running about 23 times faster per epoch than RNNLIB, probably because it processes many sequences in parallel. For more details, see the paper describing its implementation 1).
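As a rough illustration of what processing many sequences in parallel means, the Python sketch below pads variable-length sequences into one batch with a mask and steps through time once for the whole batch. It uses a toy running-sum update in place of an LSTM, and the helper names `pad_batch` and `masked_running_sum` are hypothetical; this is not CURRENNT's implementation, which is described in the cited paper.

```python
from typing import List, Tuple


def pad_batch(sequences: List[List[float]],
              pad_value: float = 0.0) -> Tuple[List[List[float]], List[List[int]]]:
    """Pad variable-length sequences to a common length.

    Returns the padded batch (one row per sequence) and a mask with 1 for real
    frames and 0 for padding, so a recurrent layer can step through all
    sequences together and ignore the padded frames.
    """
    max_len = max(len(s) for s in sequences)
    batch = [s + [pad_value] * (max_len - len(s)) for s in sequences]
    mask = [[1] * len(s) + [0] * (max_len - len(s)) for s in sequences]
    return batch, mask


def masked_running_sum(batch: List[List[float]], mask: List[List[int]]) -> List[float]:
    """Toy recurrent update h_t = h_{t-1} + x_t applied to every sequence at
    each time step; padded (masked) frames leave the state unchanged."""
    n = len(batch)
    state = [0.0] * n
    for t in range(len(batch[0])):  # a single pass over time for the whole batch
        for i in range(n):          # in a real system this is one vectorized (e.g. GPU) op
            if mask[i][t]:
                state[i] += batch[i][t]
    return state


batch, mask = pad_batch([[1.0, 2.0, 3.0], [4.0], [5.0, 6.0]])
print(masked_running_sum(batch, mask))  # [6.0, 4.0, 11.0]
```

In a GPU implementation, the inner loop over sequences collapses into one matrix operation per time step, which is presumably where most of the speedup over one-sequence-at-a-time training comes from.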

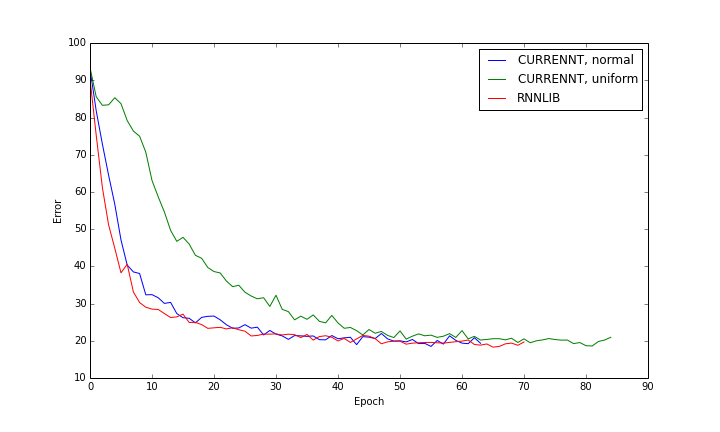

As mentioned above, the training dynamics differed between the systems because the initialization was not the same. The validation error at each epoch for CURRENNT (with both uniform and normal initialization) and RNNLIB is plotted below. rnntools isn't included because it didn't finish training in time.