Dynamical Remixing System based on User Intention

Authors

Ping-Keng Jao (from Academia Sinica)

Steven Tsai (from National Taiwan University)

Bertram Liu (from National Chiao Tung University)

Chia-Hao Chung (from National Taiwan University)

Yi-Wei Chen (from National Taiwan University)

The idea

When listening to a music recording, a listener sometimes wants to focus on a specific part, for example the violin or the bassoon. This project therefore proposes a framework that dynamically (in real time) predicts the user's intention. If the user intends to focus on the violin, we attenuate or simply mute the other part. The goal is an automatic system, so that the user can simply listen instead of adjusting the mix by hand.

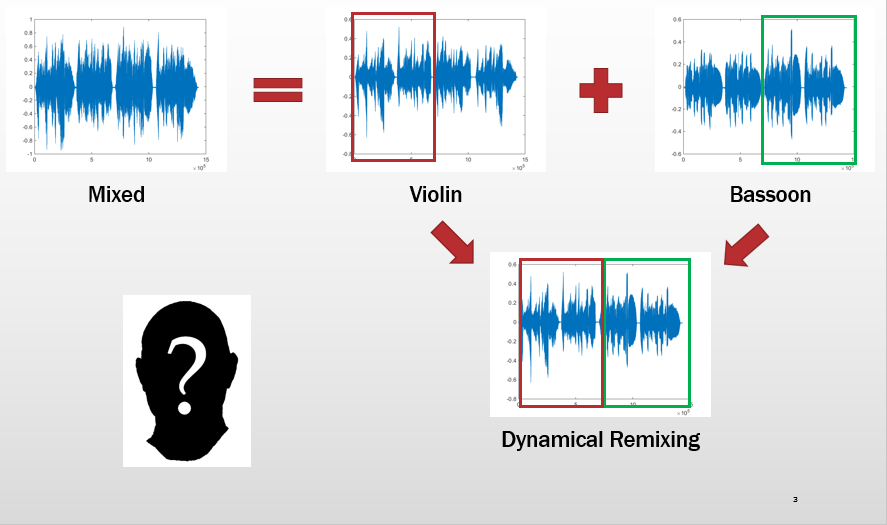

The accompanying figure gives a simple illustration. Assume we have a recording that mixes a violin and a bassoon, and the user focuses on the violin during the first half of the recording and on the bassoon during the second half. We want to extract the user's intention while they listen and remix the violin and the bassoon accordingly. In this case, the ideal output would contain only the violin for the first half of the recording and only the bassoon for the second half.

The system overview

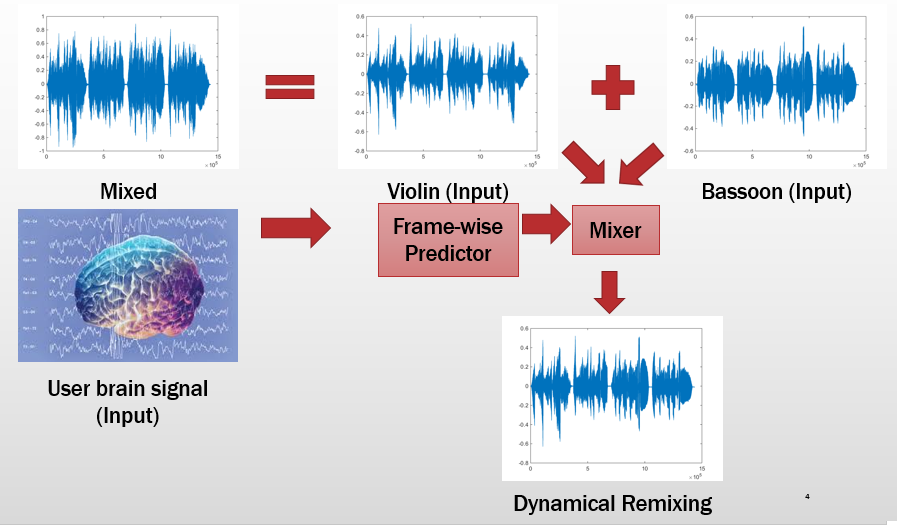

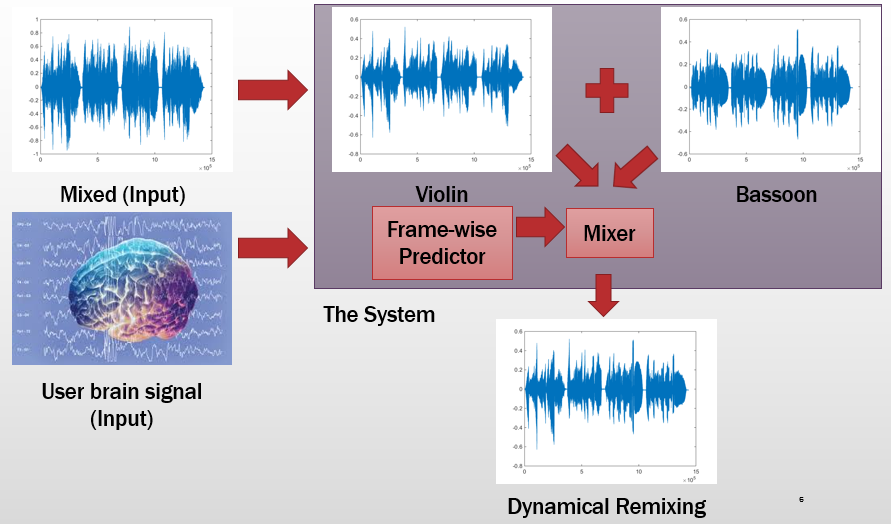

To build an automatic system, we record the user's EEG signal and extract common brain waves for each electrode. This results in an 84-dimensional feature vector for each time index. We then apply some normalization techniques and feed the feature vectors to a linear-kernel support vector machine (SVM) for training and testing.
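The page does not say which brain waves or which normalization were used. The sketch below is one plausible reading, built on assumptions: six frequency bands per electrode and 14 electrodes (the channel count of an Emotiv EPOC headset; the exact model is not stated), which would give 14 × 6 = 84 features, followed by per-dimension z-score normalization. The band edges, the 1-second window, the 128 Hz sampling rate, and the helper names `band_power_features` and `zscore` are all hypothetical, and random noise stands in for real EEG.

```python
import numpy as np

# ASSUMED frequency bands in Hz; the page only says "common brain waves".
BANDS = [
    (1.0, 4.0),    # delta
    (4.0, 8.0),    # theta
    (8.0, 13.0),   # alpha
    (13.0, 20.0),  # low beta
    (20.0, 30.0),  # high beta
    (30.0, 45.0),  # gamma
]

def band_power_features(window, fs):
    """Flattened per-channel band powers for one EEG window (hypothetical helper).

    window: array of shape (n_channels, n_samples).
    fs: sampling rate in Hz.
    """
    n_samples = window.shape[1]
    # Simple periodogram of every channel via the real FFT.
    power = np.abs(np.fft.rfft(window, axis=1)) ** 2 / n_samples
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    # Mean power inside each band, giving shape (n_channels, n_bands).
    feats = np.stack(
        [power[:, (freqs >= lo) & (freqs < hi)].mean(axis=1) for lo, hi in BANDS],
        axis=1,
    )
    return feats.ravel()

def zscore(features, eps=1e-12):
    """Scale each feature dimension to zero mean and unit variance."""
    return (features - features.mean(axis=0)) / (features.std(axis=0) + eps)

# Usage with random data standing in for real recordings (ASSUMED: 14 channels, 128 Hz).
rng = np.random.default_rng(0)
fs, n_channels = 128, 14
windows = rng.standard_normal((10, n_channels, fs))  # ten 1-second windows
X = zscore(np.array([band_power_features(w, fs) for w in windows]))
print(X.shape)  # (10, 84)
```

Each row of `X` would then be one input to the linear SVM. Note that computing the z-score statistics over all windows, as here, mirrors training and testing on the same data; with a held-out set the mean and standard deviation would be estimated on the training windows only.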

With the predicted labels from the SVM, we remix the violin and bassoon tracks. In the remixing system, we apply a moving average to the labels and use the averaged labels to adjust the mixing weights of the violin and the bassoon; the weights are then smoothed with a moving average as well.
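The page only says "some moving average algorithm" for both the labels and the weights. Below is a minimal sketch under stated assumptions: labels are 1 for violin and 0 for bassoon, each label covers a fixed `hop` of audio samples, both smoothing steps are centered moving averages, and the output is `w * violin + (1 - w) * bassoon` for a per-sample weight `w`. The function names, window widths, and hop size are hypothetical.

```python
import numpy as np

def moving_average(x, width):
    """Centered moving average; near the edges only available samples are averaged."""
    x = np.asarray(x, dtype=float)
    width = max(1, min(width, len(x)))  # keep the output the same length as x
    kernel = np.ones(width)
    total = np.convolve(x, kernel, mode="same")
    count = np.convolve(np.ones_like(x), kernel, mode="same")
    return total / count

def remix(violin, bassoon, labels, hop, label_width=5, weight_width=1025):
    """Remix two stems from per-frame SVM labels (hypothetical helper).

    violin, bassoon: mono stems of equal length, in samples.
    labels: one prediction per EEG frame, 1 = violin, 0 = bassoon (ASSUMED encoding).
    hop: number of audio samples covered by one label (ASSUMED fixed).
    """
    # 1) Smooth the raw labels so isolated misclassifications do not flip the mix.
    p_violin = moving_average(labels, label_width)
    # 2) Hold each smoothed label for `hop` samples to reach the audio rate.
    w = np.repeat(p_violin, hop)[: len(violin)]
    w = np.pad(w, (0, len(violin) - len(w)), mode="edge")
    # 3) Smooth the per-sample weight again to avoid clicks at frame boundaries.
    w = moving_average(w, weight_width)
    return w * violin + (1.0 - w) * bassoon

# Usage: constant stand-in stems make the effect of the weights easy to see.
violin = np.ones(8000)
bassoon = -np.ones(8000)
labels = [1] * 10 + [0] * 10  # violin attended first, then bassoon
out = remix(violin, bassoon, labels, hop=400, label_width=3, weight_width=201)
print(out[0], out[-1])  # 1.0 -1.0
```

Smoothing twice trades responsiveness for stability: wider windows hide more classifier errors but delay the switch from one instrument to the other.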

Dataset

Audio:

Tracks 7, 8, 9, and 10 from the Bach10 dataset; only the violin and bassoon parts were used.

Brain signal:

Recorded on the first day of this HAMR. Subject: Steven Tsai. Equipment: Emotiv EEG headset.

Scenario: The subject was asked to listen carefully to either the violin or the bassoon, giving 4 songs × 2 cases = 8 trials in total. Note that the subject was NOT asked to think “I am listening to the violin (or bassoon),” but to use his ears to track the target instrument.

Validation:

We cheated: the classifier was trained and tested on all of the collected data, so there was no held-out test set.
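Because training and testing used the same data, the accuracies are likely optimistic. One way to get a fairer estimate (not what was done here) is to hold out one song at a time, so the classifier never sees EEG recorded while listening to the test piece. The sketch below only generates such folds from the 4 songs × 2 cases described above; the fold scheme and the names `TRIALS` and `leave_one_song_out` are our assumptions.

```python
from itertools import product

SONGS = [7, 8, 9, 10]            # Bach10 track numbers used on this page
TARGETS = ["violin", "bassoon"]  # instrument the subject attended to

# The 4 songs x 2 cases = 8 trials.
TRIALS = list(product(SONGS, TARGETS))

def leave_one_song_out(trials):
    """Yield (held_out_song, train_trials, test_trials) for each song.

    Both trials of the held-out song go to the test set, so neither
    attention condition of that piece is seen during training.
    """
    for song in sorted({s for s, _ in trials}):
        train = [t for t in trials if t[0] != song]
        test = [t for t in trials if t[0] == song]
        yield song, train, test

for song, train, test in leave_one_song_out(TRIALS):
    print(song, len(train), len(test))  # e.g. "7 6 2": 6 training trials, 2 test trials
```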

Result

Accuracy

| Track | Violin | Bassoon |
|-------|--------|---------|
| 7th | 0.7438 | 0.6016 |
| 8th | 0.6799 | 0.5959 |
| 9th | 0.7150 | 0.6802 |
| 10th | 0.6848 | 0.8239 |

Overall: 0.6906
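The overall figure matches the unweighted mean of the eight per-trial accuracies, as the short check below shows; the page does not say whether it was computed this way or pooled over all frames (the two agree only if every trial has the same number of frames).

```python
# Per-trial accuracies listed above: (track, attended instrument) -> accuracy.
ACCURACY = {
    (7, "violin"): 0.7438, (7, "bassoon"): 0.6016,
    (8, "violin"): 0.6799, (8, "bassoon"): 0.5959,
    (9, "violin"): 0.7150, (9, "bassoon"): 0.6802,
    (10, "violin"): 0.6848, (10, "bassoon"): 0.8239,
}

# Unweighted mean over the eight trials.
overall = sum(ACCURACY.values()) / len(ACCURACY)
print(f"{overall:.4f}")  # 0.6906
```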

Remixed waves

You can find them on Google Drive here. Odd-numbered outputs should contain only the violin, and even-numbered outputs only the bassoon.

Future work

The ideal next step is to build a real-time source separation system and a real-time, high-accuracy intention predictor, then combine the two into a dynamic remixing system based on user intention.