
iNtelligent Audio Switch Box

About

iNASB, short for iNtelligent Audio Split Box and at the same time an acronym of the creators' first names (Nikolay, Artyom, Simon-Claudius and Brecht), is a VST or Audio Unit plugin that routes an audio signal to one of two channels based on the characteristics of the signal. It learns which channel to switch to during a training stage, in which example audio for each channel is recorded. The plugin is ready to use once both channels have audio associated with them.

An example use is augmenting an instrumental performance by controlling any kind of audio effect through parameters embedded in the performance itself, such as pitch, level or timbral features: for example, a guitarist who wants harmonic distortion only on the higher notes, a vocalist who wants a long echo on the really loud hits, or a trombonist who needs a flanger on slides between notes. It can also be used in less creative, more functional ways, to control level, dynamics or spectrum.

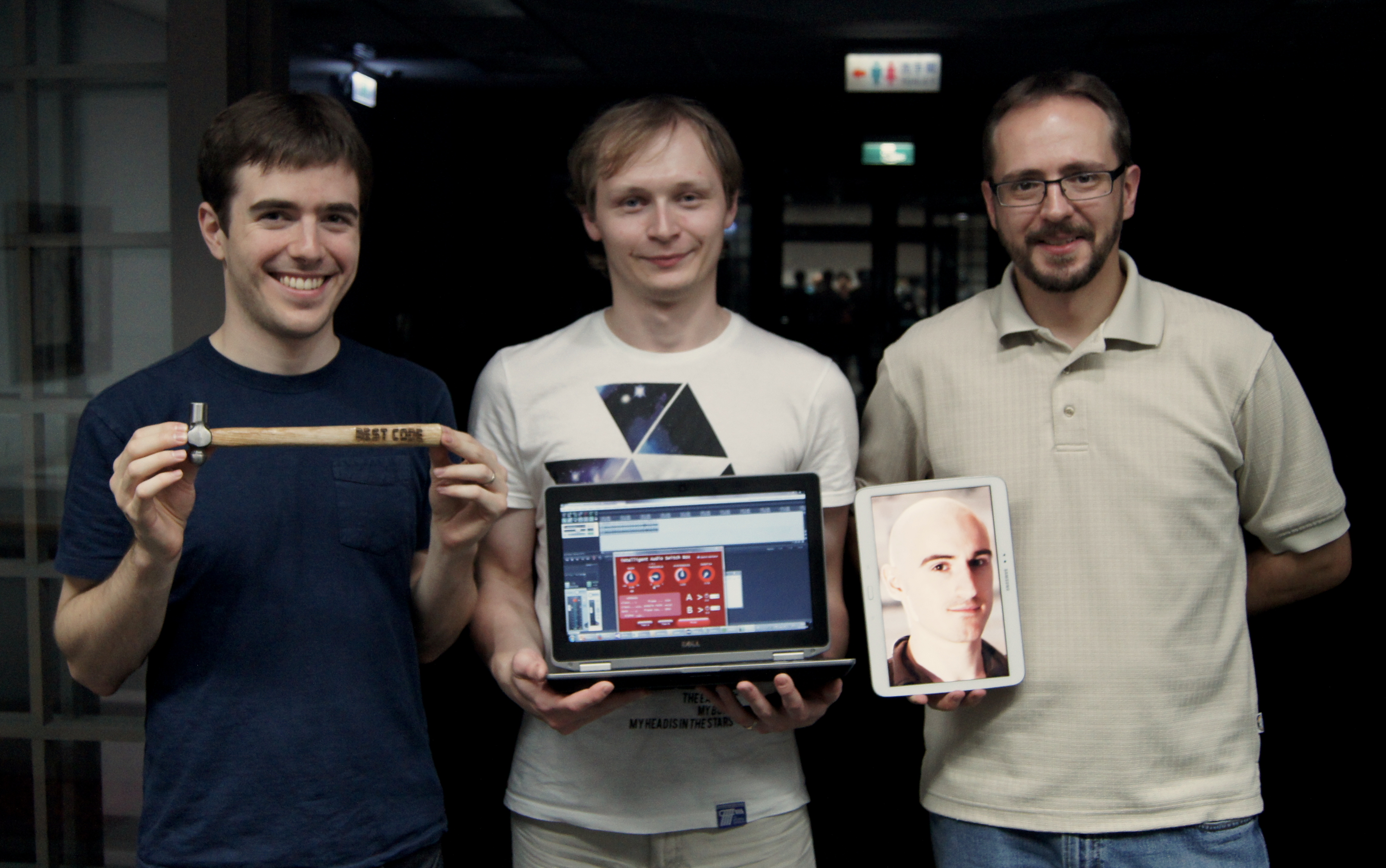

The Team

* Brecht De Man, PhD student at Queen Mary University of London, United Kingdom

* Nikolay Glazyrin, developer at Yandex, Russia

* Tom (Artyom) Arjannikov, Master's student at the University of Lethbridge, Canada

* Simon-Claudius Wystrach (remote), Master's student at the University of York, United Kingdom

Features

Plugin

Parameters

GAIN

- Amplification level of the input signal.

THRESHOLD

- The decision boundary between channel A and channel B.

HYSTERESIS

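- Like the hysteresis of a noise gate, keeps the current state (channel) until the signal moves clearly past the threshold, which prevents rapid switching back and forth.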

INERTIA

- Determines how quickly parameter changes are applied; for example, a high inertia setting creates a sweeping effect.
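The text above does not specify exactly how THRESHOLD, HYSTERESIS and INERTIA interact. The following is a minimal C++ sketch under stated assumptions: the classifier is assumed to output a score between 0 and 1 (for instance, the share of nearest training examples labelled B), hysteresis is modelled as a margin around the threshold, and inertia as a one-pole smoothing coefficient on a crossfade between A and B. `SwitchState`, `decideChannel`, `smoothCrossfade` and `processFrame` are hypothetical names, not the plugin's actual code.

```cpp
#include <cassert>

// Hypothetical per-frame switching state.
struct SwitchState {
    bool channelB = false;   // false = channel A (left), true = channel B (right)
    float crossfade = 0.0f;  // 0 = fully channel A, 1 = fully channel B
};

// THRESHOLD with HYSTERESIS: `score` (assumed to be in [0, 1]) must move past
// the threshold by more than `hysteresis` before the channel changes.
bool decideChannel(bool current, float score, float threshold, float hysteresis) {
    if (!current && score > threshold + hysteresis) return true;
    if (current && score < threshold - hysteresis) return false;
    return current;  // inside the hysteresis band: keep the current state
}

// INERTIA as a one-pole smoother: values close to 1 move the crossfade slowly
// toward its target (a sweep); 0 jumps to the target immediately.
float smoothCrossfade(float crossfade, bool channelB, float inertia) {
    assert(inertia >= 0.0f && inertia < 1.0f);
    const float target = channelB ? 1.0f : 0.0f;
    return inertia * crossfade + (1.0f - inertia) * target;
}

// One analysis frame: decide the channel, then move the crossfade toward it.
void processFrame(SwitchState& state, float score, float threshold,
                  float hysteresis, float inertia) {
    state.channelB = decideChannel(state.channelB, score, threshold, hysteresis);
    state.crossfade = smoothCrossfade(state.crossfade, state.channelB, inertia);
}
```

With a threshold of 0.5 and a hysteresis of 0.1, a score of 0.55 leaves the sketch on channel A; it only switches to B once the score exceeds 0.6, and only returns to A once the score falls below 0.4.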

Learning

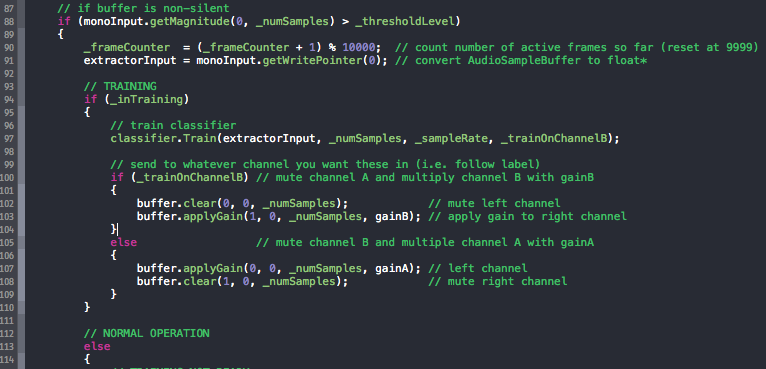

During the learning stage, four spectral features (centroid, flatness, crest and roll-off) are extracted from training examples, associated with one of the channels and stored in memory. The user toggles the “Train A” or “Train B” button and then produces sound for the plugin to learn from. The “Finish” button completes the training stage, after which the plugin is ready to route the signal.
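The four features are named above but their formulas are not given. The sketch below uses common textbook definitions, which is an assumption rather than necessarily what iNASB computes: the centroid is the magnitude-weighted mean frequency, flatness is the ratio of the geometric to the arithmetic mean of the power spectrum, crest is the peak-to-mean ratio of the magnitude spectrum, and roll-off is the frequency below which 85% of the energy lies. The input is assumed to be a magnitude spectrum (bins 0 to N/2) computed elsewhere by an FFT; `Features` and `extractFeatures` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical feature vector for one analysis frame.
struct Features {
    float centroid;  // Hz
    float flatness;  // near 0 = tonal, near 1 = noise-like
    float crest;     // peak-to-mean ratio of the magnitude spectrum
    float rolloff;   // Hz below which `rolloffFraction` of the energy lies
};

// `mag` holds magnitudes for bins 0..N/2 of an N-point FFT computed elsewhere.
Features extractFeatures(const std::vector<float>& mag, float sampleRate,
                         float rolloffFraction = 0.85f) {
    assert(mag.size() >= 2);
    const std::size_t fftSize = 2 * (mag.size() - 1);
    const float binHz = sampleRate / static_cast<float>(fftSize);
    const double eps = 1e-12;  // avoids division by zero and log(0) on silence

    double magSum = 0.0, weightedSum = 0.0, energy = 0.0, logPowerSum = 0.0;
    float peak = 0.0f;
    for (std::size_t k = 0; k < mag.size(); ++k) {
        const double m = mag[k];
        const double p = m * m;
        magSum += m;
        weightedSum += static_cast<double>(k) * binHz * m;
        energy += p;
        logPowerSum += std::log(p + eps);
        peak = std::max(peak, mag[k]);
    }
    const double n = static_cast<double>(mag.size());

    Features f;
    f.centroid = static_cast<float>(weightedSum / (magSum + eps));
    // Geometric mean over arithmetic mean of the power spectrum.
    f.flatness = static_cast<float>(std::exp(logPowerSum / n) / (energy / n + eps));
    f.crest = static_cast<float>(peak / (magSum / n + eps));

    // Roll-off: first bin at which the cumulative energy reaches the fraction.
    f.rolloff = 0.0f;
    double cumulative = 0.0;
    for (std::size_t k = 0; k < mag.size(); ++k) {
        cumulative += static_cast<double>(mag[k]) * mag[k];
        if (cumulative >= rolloffFraction * energy) {
            f.rolloff = static_cast<float>(k) * binHz;
            break;
        }
    }
    return f;
}
```

A flat spectrum gives a flatness close to 1 and a crest close to 1, whereas a single spectral peak gives a flatness close to 0 and a high crest. These are the kinds of contrasts the classifier can learn from.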

Playback

During playback, an algorithm finds the training example(s) nearest to the features extracted in real time from the incoming sound, and the class of those examples determines the channel to which the signal is routed.
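The playback step described above amounts to nearest-neighbour classification. Here is a minimal k-nearest-neighbour sketch that assumes Euclidean distance over the four features and a majority vote among the k closest training examples. The value of k, the distance measure and the names `TrainingExample`, `scoreChannelB` and `classify` are assumptions made for illustration.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using FeatureVector = std::array<float, 4>;  // centroid, flatness, crest, roll-off

// Hypothetical stored training example.
struct TrainingExample {
    FeatureVector features;
    bool channelB;  // false = channel A, true = channel B
};

float squaredDistance(const FeatureVector& a, const FeatureVector& b) {
    float d = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float diff = a[i] - b[i];
        d += diff * diff;
    }
    return d;
}

// Fraction of the k nearest training examples that are labelled B.
float scoreChannelB(const std::vector<TrainingExample>& training,
                    const FeatureVector& query, std::size_t k) {
    assert(!training.empty() && k > 0);
    k = std::min(k, training.size());
    std::vector<std::pair<float, bool>> dist;
    dist.reserve(training.size());
    for (const TrainingExample& ex : training)
        dist.emplace_back(squaredDistance(ex.features, query), ex.channelB);
    // Only the k smallest distances need to be in sorted order.
    std::partial_sort(dist.begin(), dist.begin() + static_cast<std::ptrdiff_t>(k),
                      dist.end(),
                      [](const std::pair<float, bool>& x, const std::pair<float, bool>& y) {
                          return x.first < y.first;
                      });
    std::size_t votesB = 0;
    for (std::size_t i = 0; i < k; ++i)
        if (dist[i].second) ++votesB;
    return static_cast<float>(votesB) / static_cast<float>(k);
}

// Majority vote: true routes to channel B, false to channel A.
bool classify(const std::vector<TrainingExample>& training,
              const FeatureVector& query, std::size_t k = 3) {
    return scoreChannelB(training, query, k) > 0.5f;
}
```

The features live on very different scales (centroid and roll-off in hertz, flatness between 0 and 1), so a practical version would probably normalize each feature before computing distances; the sketch omits this. The score from `scoreChannelB` could also feed a threshold stage like the THRESHOLD parameter.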

Implementation

Guidelines

First, note that channel A corresponds to 0, false and left, and channel B corresponds to 1, true and right. Which form applies depends on the context: JUCE numbers the channels 0 and 1, which map to left and right in a typical Digital Audio Workstation (DAW) setup, while we use booleans to toggle between the two channels.
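To make this convention concrete, here is a minimal sketch that routes a mono input block to the left (A) or right (B) output channel according to a boolean. It uses plain pointer arrays rather than JUCE's buffer classes so that it stands alone, and it treats GAIN as a linear factor, which is an assumption. `channelIndex` and `routeBlock` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstddef>

// Convention: false / 0 / left = channel A, true / 1 / right = channel B.
constexpr int channelIndex(bool channelB) { return channelB ? 1 : 0; }

// Hypothetical routing helper: writes the gained input to the selected output
// channel and silences the other one. `outputs` holds (left, right) pointers.
// Each input sample is read before either output sample is written, so the
// input may alias one of the outputs (in-place processing).
void routeBlock(const float* input, float* const outputs[2], std::size_t numSamples,
                bool channelB, float gain) {
    assert(outputs[0] != outputs[1]);
    float* active = outputs[channelIndex(channelB)];
    float* inactive = outputs[channelIndex(!channelB)];
    for (std::size_t i = 0; i < numSamples; ++i) {
        active[i] = gain * input[i];
        inactive[i] = 0.0f;
    }
}
```

This is a hard switch; a crossfade between the two outputs would avoid clicks when the channel changes.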

Tools

We use the JUCE toolkit to build VST/AU plugins. If you are on Mac OS X, first see this page to fix some Core Audio related issues with Xcode. If you are on Windows, we recommend Visual Studio for working with the code.

You will need Steinberg's VST SDK (version 2.4 for standard VST plugins; the 3.0 SDK also includes version 2.4).

Finally, working with JUCE is significantly easier in one of the IDEs it supports, namely any version of Visual Studio on Windows, Xcode on Mac and Code::Blocks on Linux (though setup there can be fussier).

Distribution

To be released. Stay tuned!